LLMs are coming to equity research. Learn how to adapt and prosper. A note from Hudson Labs CTO, Suhas Pai.

About the author: Suhas Pai is an expert in applied large language models who first started working in the NLP space in 2010. In addition to his role as co-founder and CTO at Hudson Labs Suhas is writing a book about large language models with O’Reilly Publishing. He is the chair at Toronto Machine Learning Summit (2021, 2022, 2023). He was also the privacy co-chair of BigScience, an open source project that released BLOOM, one of the world’s largest open-source language models.

It has been heartening to see all the recent advances in language models, spurred by the launch of ChatGPT and GPT-4 by OpenAI. The phrase ‘language model’ has finally been catapulted into the mainstream and at Hudson Labs, we couldn’t be more delighted.

Language models have been the foundation of our technology stack ever since Hudson Labs set up shop in 2020. Check out our blog for more information about our production models. We have been working on a financial Q&A assistant since mid-2022, akin to a financial variant of ChatGPT, long before ChatGPT was launched.

No doubt these LLMs (Large Language Models) have vastly expanded the realm of possibilities for novel use cases previously considered impossible. There are already several useful applications built on top of these technologies that are significantly enhancing human productivity. Is the same possible in the world of finance?

Financial use cases have high acceptability requirements. Key requirements include:

- Factuality - Output from AI systems should be fact-based and grounded in reality.

- Auditability - There should be a means to verify the truthfulness of outputs from AI systems (e.g. citations)

- Trustworthiness - The system should not fail in unexpected ways, and behave in a manner expected of it.

- Completeness - The system should be able to detect all data points of interest and be able to present the entire picture.

Even state-of-the-art LLMs, while excellent at understanding questions, summarization and other language tasks, suffer from ‘hallucination’ issues. The output they generate is not grounded in the real world and often contains factual inconsistencies. Sometimes, it is just a date or a number that is incorrect, sometimes the entire content generated is utter nonsense. For example, a law professor was falsely accused of sexual assault by ChatGPT, citing articles and other evidence that never existed. Here is GPT-4 providing a very incorrect definition of the phrase ‘critical audit matter’, and getting wildly confused about legal issues at Uber.

Hudson Labs' language model-driven features

Even though we’re still a young company (3 years old), our models have been constantly upgraded as we make in-house research advances and adopt the latest tools and techniques being developed in the field.

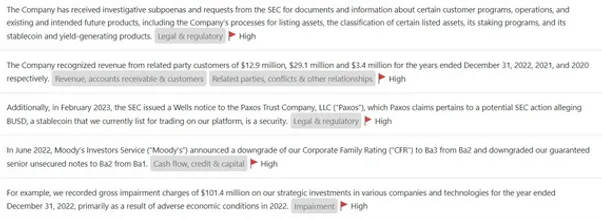

Our first generation models, launched in 2021, facilitate our ‘core capability’ - separating out inconsequential boilerplate from meaningful information in SEC filings, even when they are linguistically similar, and solving the information overload problem by extracting 20-30 key red flags from a 100 page document like a 10-K (annual report).

A red flag is defined as an event or an outcome at a company that can be an early indicator of earnings manipulation or fraud, increasing the probability of SEC action. Our premium product, the BFI Risk Score, serves as a measure of the likelihood of fraud or malfeasance.

- Our second generation models, launched in 2022, significantly improved our information ranking capabilities (the ability to determine relative severity of red flags) and facilitate our real-time AI-generated financial news feed. Our automated news feed is not a news aggregator, it combs through SEC filings and other documents in real-time and extracts price-moving information as it’s published.

- The second-generation models also drive our red flag categorization models, which helps categorise our red flags into different topics so that they can be used in calculating aggregate-level statistics. It also serves as the foundation of our ‘Timeline view’ - a chronological display of red flags as they unfold through time.

- Our upcoming third generation models, augmented by GPT-4, facilitate a whole host of exciting features including SEC filing summaries, a finance-specific question answering assistant, as well as better contextualised red flags. We dive into the details below.

We are launching the new features powered by our third generation models in the coming weeks. Never miss a new feature, sign up for our product watchlist and newsletter!

AI-generated SEC filing summaries & company overviews

Securities filings like 10-K annual reports can run into hundreds of pages. Our new feature generates a comprehensive summary of all the price-moving information contained in a filing. The content in the summary is categorised and can be filtered, so you can only focus on content related to mergers and acquisitions, for example, or only red flags. Again, the content in the summary comes with citations, and simply clicking on each sentence will take you to the position of the original content in the filing that contains the ground truth. This assures you are always accessing accurate and complete information.

GPT-4 or Bing Chat can’t provide good summaries because they do not have the embedded financial knowledge to determine what part is important or what is not.

The ‘context window’ for GPT-4 is simply not large enough to demonstrate all the nuances of what makes something important enough to be added to a summary. The Bedrock AI advantage is that we have our in-house models that do exactly that!

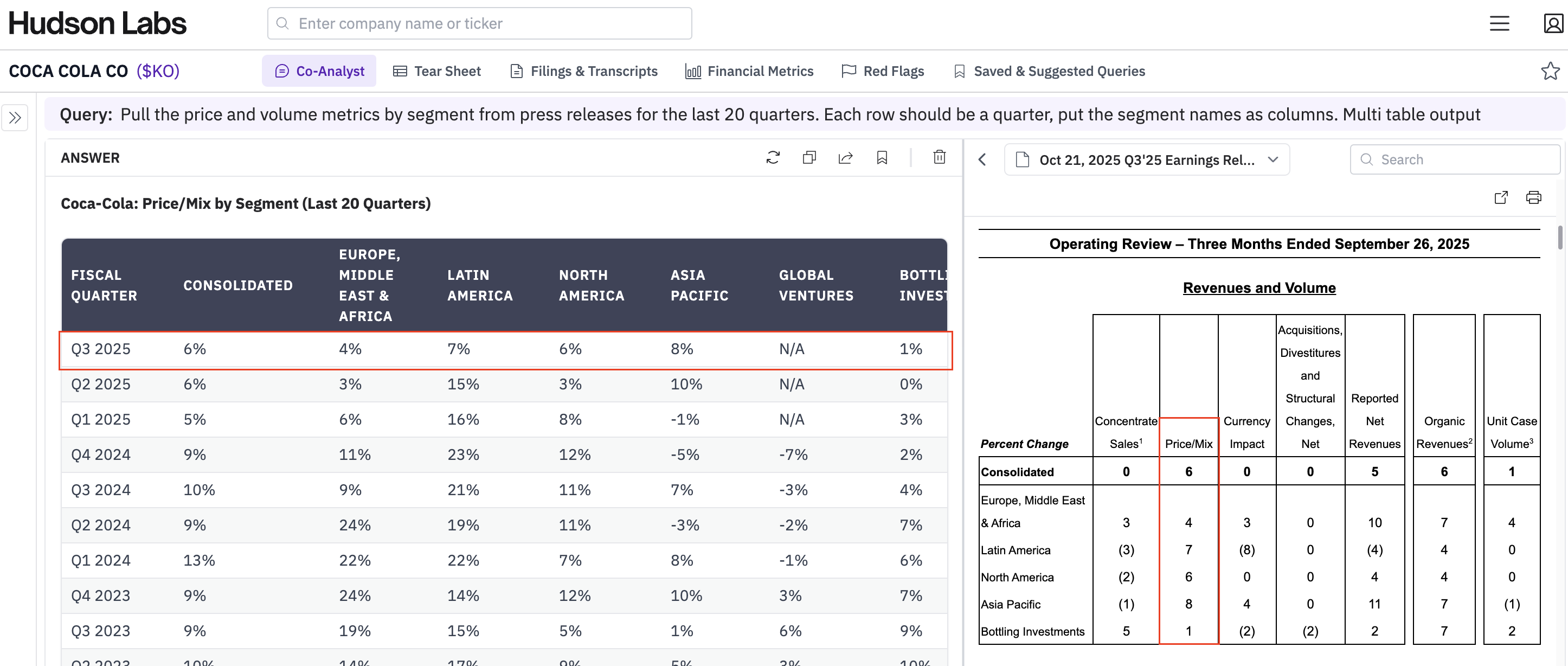

Financial Question Answering

The chatbot paradigm is currently in vogue, fuelled by BingChat and ChatGPT. The conversational paradigm is a more natural model for interaction with computer systems. We are therefore delighted to announce our financial question answering assistant that can answer a wide variety of questions about U.S public companies.

No, this is not a glorified front-end to ChatGPT/GPT-4. We leverage GPT-4 to produce a grammatical and coherent answer in a structured and readable form, but it is never used to answer any financial question. That is handled by our own more reliable models. The key advantage of our question answering system lies in its ability to answer aggregation level queries - for example, ‘what percentage of mid cap companies have had a CFO resignation’, in addition to simpler queries like ‘list all the risk factors in Apple’s latest 10-K’.

This capability is currently not available anywhere and we predict this will be a huge value add to analyst workflows. Questions can be asked at the global level, the market cap group and sector level, company level, filing level, or filing section level (e.g. MD&A only).

Contextualized Red Flags

Currently, Hudson Labs' red flags are displayed verbatim as they appear in SEC filings. While this guarantees factualness, it is not ideal because…

- Financial text is long-winded and contains legalese that is cumbersome to read.

- Individual sentences may not necessarily contain the entire context needed to put the red flag into perspective.

Our new feature rephrases red flags so that they contain more context and are readable in plain English. This reduces the time needed to process through them. Clicking on the flag opens a popup that displays the position of the original sentence in the filing, thus ensuring instant factual verification.

This feature will be available in our news feed, timeline view, and watchlist email notifications.

Comparison of Hudson Labs models to BloombergGPT

While a direct comparison to BloombergGPT is impossible since the model is not publicly available, here are a few points to note:

- BloombergGPT reports training their models on 3k+ SEC filings. Hudson Labs has completed pre-training on 200k+ SEC filings, 65 times more than BloombergGPT.

- Hudson Labs models are instruction-tuned which means they are tuned specifically to respond to the kind of queries that we want it to respond to. Bloomberg’s paper doesn’t mention instruction-tuning yet.

- Bloomberg models are probably going to be better at interpreting financial metrics and dealing with numbers (neither of which is part of Bedrock’s focus)

Overall, it is an exciting time to be in the world of AI and Finance. At Hudson Labs, our small but fast-moving team has been at the forefront of the financial language model space for several years now, and we are looking forward to keeping that up with our new features.