As a tool for everyday use, artificial intelligence seemingly emerged out of nowhere. OpenAI released ChatGPT, built on GPT-3.5, in late 2022 and followed it with GPT-4 in early 2023, both to much excitement. Soon, regular people who knew nothing about data science or machine learning were using these tools.

The excitement spread quickly. Mainstream media coverage obsessed over how AI tools could change everything, especially work. New tools quickly cropped up, promising efficiencies and better results for all, just by typing words into a box. AI made it feel like work routines in virtually every field would be a thing of the past. Suddenly, it was as if we all had some witchcraft at our fingertips. As our collective imagination was unlocked, the hype around AI also grew. But what also began to come into view was our misunderstanding of what AI could actually do versus what we wanted it to do.

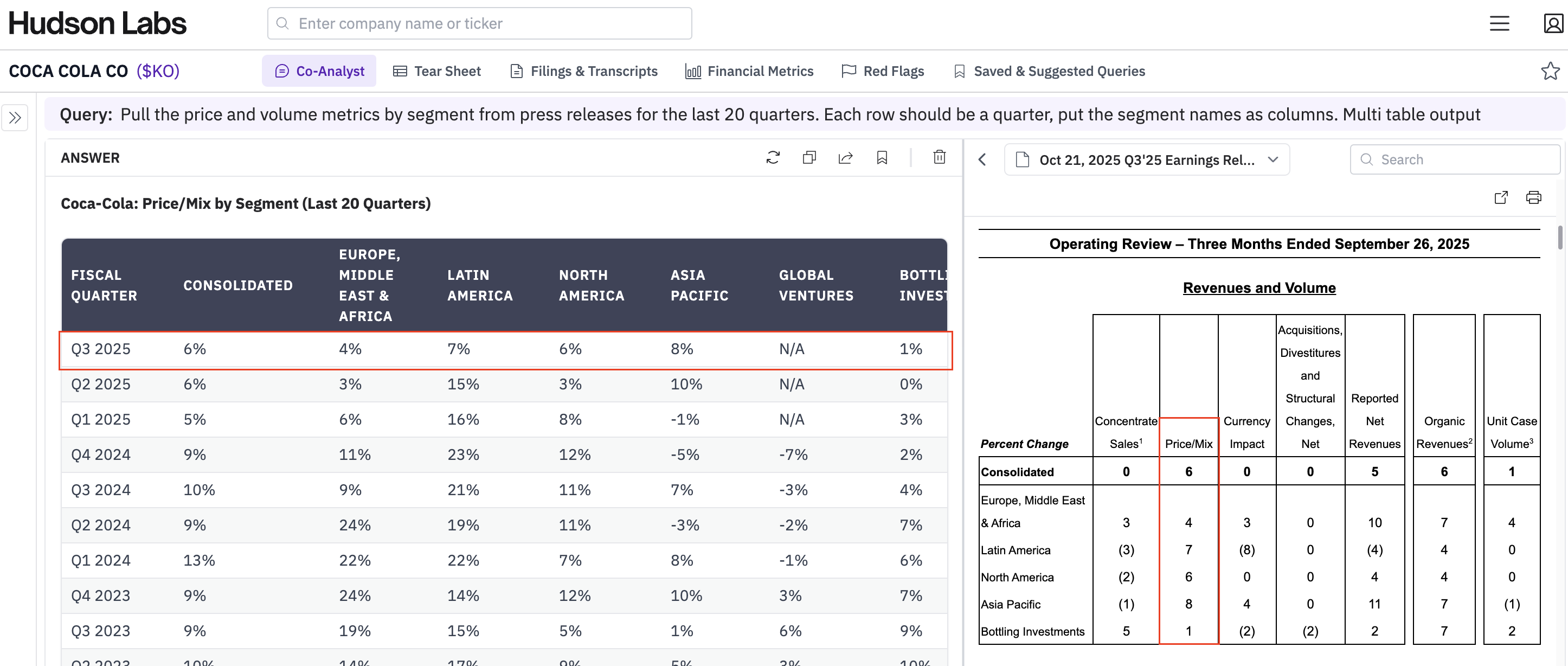

In modern finance, and particularly in equity research, many people saw generative AI tools as an edge they could use in their investing strategy. And, yes, AI chatbots have a role to play in equity research, but there’s a critical difference between our hopes and reality. This post will explain how to apply these tools for the best results.

How ChatGPT is used in equity research right now

Many of the analysts we speak to are experimenting with generative chatbots like ChatGPT, Bing, Bard and finance-specific bots to automate or enhance their research process.

The most common use cases for AI chatbots right now include:

- Generate suggested language for report writing — Asking a generative AI tool to wordsmith your reports to sound polished can be both effective and quick. Generative tools can turn a few bullet points into well-written paragraphs. Always review the final result to confirm the model hasn’t fabricated any “facts” in the process.

- Background info on technologies or sectors — When you’re researching a company or sector for the first time, sometimes it's easier to have a complicated technology explained to you in plain English. ChatGPT and other bots do a good job of conveying complicated topics simply. The primary drawback here, however, is that when the technology is more niche, these explanations are sometimes factually incorrect. Reduce the chance of “hallucination” by copying the text from a reliable source directly into the prompt.

- Summarizing earnings calls and broker reports — Automated summarization is excellent for decreasing your time to insight. In instances where you just need the general gist of a report, a chatbot can helpfully summarize key points. As detailed below, a more robust approach is required for analysts who need a complete and factual summary.
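The tip above about pasting text from a reliable source into the prompt can be sketched in a few lines of Python. This is a minimal illustration, not a recommended template: the function name `build_grounded_prompt`, the instruction wording, and the `max_chars` limit are all assumptions made for this example, and the resulting string still has to be sent to whichever chatbot you use.

```python
def build_grounded_prompt(question: str, source_text: str, max_chars: int = 12000) -> str:
    """Wrap a question with trusted source text so the model answers from it.

    Hypothetical helper: the instruction wording and the character limit
    are illustrative choices, not a tested recipe.
    """
    # Keep only as much source text as we are willing to paste into the prompt.
    excerpt = source_text.strip()[:max_chars]
    return (
        "Answer the question using only the source text below. "
        "If the source does not contain the answer, reply "
        "'Not stated in the source.'\n\n"
        f"SOURCE:\n{excerpt}\n\n"
        f"QUESTION: {question}"
    )
```

Telling the model what to say when the source is silent gives it an alternative to inventing an answer, but it does not guarantee accuracy, so the output still needs a human check.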

These can all be effective ways to use AI chatbots like ChatGPT or Bard to improve equity research workflows. As we alluded to, however, there are risks to using AI chatbots in equity research, the biggest being that generative models often invent false but plausible information. It’s essential to know the limitations and proceed accordingly.

Generative AI isn’t a magical solution

Where people often get tripped up using generative AI is asking the chatbots to do things they’re not designed to do. What generative AI does well is imitate human language patterns. And because it does this so well, users often treat it as a search engine to get an answer or a database to be queried. This can lead to hilariously (or tragically) wrong results known as hallucinations. In these instances, a generalist chatbot’s response sounds perfectly reasonable and may even provide seemingly credible references to support it. Hallucinations are especially common in fields like finance, where the amount of credible information on the internet is limited.

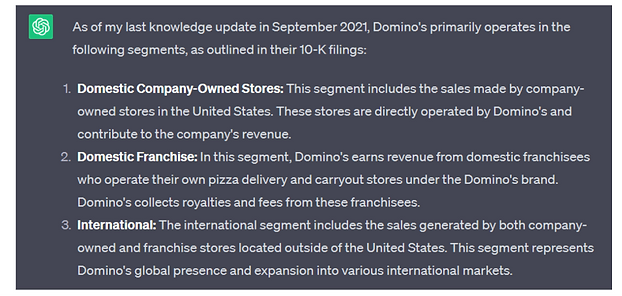

But even more specialized generative bots can get tripped up. For example, in a recent experiment we conducted, a finance-specific chatbot called Hila.ai was prompted with a basic question about Domino’s (DPZ) business segments. Hila gave a very plausible response, but it just so happened to be wrong. Not to mention that it also cited the wrong source.

ChatGPT didn’t fare much better in our experiment. We gave a variety of prompts to ChatGPT-3.5, but the best response it could manage omitted one of Domino’s business segments and split another into two.

For the record, Domino’s three business segments are: 1) US stores, 2) International franchise, and 3) Supply chain.

Hudson Labs' AI-generated company background memo for Domino's identified the business segments correctly and we achieve close to 100% accuracy on business segment identification across all EDGAR issuers.

Here’s a table showing how Hudson Labs' results compared to ChatGPT and Hila for identification of business segments. To learn more about how we performed this comparison, see "A Comparison of Generative Chatbots to Hudson Labs."

Hallucinations result in inefficient, time-consuming, and costly additional work. Chatbots prone to errors in equity research will not save you time and will not result in better outcomes.

How Hudson Labs is different

Hudson Labs' approach combines what generative AI models do best (wordsmithing) with our in-house finance-oriented AI models, which have been trained on tens of thousands of SEC filings. Those filings contain more than eight million pages of corporate disclosures and financial language, giving our models true domain expertise.

We control our process end-to-end to provide summaries and memos that are factual, credible, and auditable.

This is just one of Hudson Labs' many advantages over generalist chatbots. Here is a summary of some of our other unique advantages:

- Finance-first models — Our in-house models are trained on 8 million pages of financial disclosure and boast better financial and business acumen than generalist models.

- Noise Suppression — Our boilerplate identification model can correctly classify more than 99 percent of sentences as boilerplate or not. Less boilerplate reduces noise and improves the quality of our input data (and, therefore, the quality of the output).

- Ranking — For data-oriented applications, the adage is “garbage in, garbage out.” We rank information for relevance to an equity analyst, ensuring we feed high-quality inputs to our models.

The combination of noise suppression and ranking means we're able to get more relevant information into the model's "context window," i.e., the prompt. It also reduces opportunities for confusing the model. On top of that, our finance-specific models have better reasoning capabilities on financial text.
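To make the idea of filtering, ranking, and then filling a limited context window concrete, here is a rough Python sketch. It is not how Hudson Labs' models work: simple keyword rules stand in for the trained boilerplate and ranking models, and every name in it (`BOILERPLATE_MARKERS`, `is_boilerplate`, `relevance`, `select_context`) is hypothetical, as is the use of a word count as the budget.

```python
import re

# Hypothetical phrases that mark a sentence as boilerplate. A trained
# classifier would replace this list in a real system.
BOILERPLATE_MARKERS = (
    "forward-looking statements",
    "safe harbor",
    "no obligation to update",
)


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def is_boilerplate(sentence: str) -> bool:
    """Flag a sentence if it contains any of the marker phrases."""
    lower = sentence.lower()
    return any(marker in lower for marker in BOILERPLATE_MARKERS)


def relevance(sentence: str, keywords: set[str]) -> int:
    """Score a sentence by how many distinct keywords it mentions."""
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    return len(words & keywords)


def select_context(text: str, keywords: list[str], budget_words: int) -> list[str]:
    """Drop boilerplate, rank what is left, and keep what fits the budget."""
    wanted = {k.lower() for k in keywords}
    candidates = [s for s in split_sentences(text) if not is_boilerplate(s)]
    # sorted() is stable, so equally scored sentences keep their original order.
    ranked = sorted(candidates, key=lambda s: relevance(s, wanted), reverse=True)
    chosen, used = [], 0
    for sentence in ranked:
        length = len(sentence.split())
        if used + length <= budget_words:
            chosen.append(sentence)
            used += length
    return chosen
```

The point of the sketch is the order of operations: removing noise first and ranking second means the limited prompt space is spent on the sentences most likely to matter to an analyst.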

The primary way that we're different from chatbots is that we keep control of the entire process, which means we can ensure that we never put our models in a position where they're likely to fail.

Frustrated by getting incorrect results every time you ask ChatGPT about business segments or even basic background info on a public company?

See Hudson Labs in action - book a demo