We conducted a structured reliability test of the Hudson Labs Co-Analyst and other popular models across 25 complex financial queries with high precision requirements. In other words, we tested AI systems in the situations where failures are both most common and highest-impact.

Each query required locating figures across several reporting periods—often spanning multiple years of SEC filings, earnings releases, and transcripts.

The Co-Analyst produced zero hallucinations across all 25 queries. It did not fabricate, estimate, or interpolate missing values, and every reported figure was grounded in primary source disclosures.

By comparison, Perplexity Pro made at least one error in every one of its 25 answers.

Creating a Test for AI in Finance

We selected 25 U.S. publicly traded companies at random, with market capitalizations between $1B and $300B. For each company, we selected a reported metric or KPI that is not presented directly on the face of the financial statements.

By selecting companies outside the top 50 and using non-standard metrics, we avoided testing the retrieval of information that is readily available in model memory. This ensured that we were testing each model’s ability to process new information rather than recall what it had memorized. This matters because most real-world finance tasks require inference over current disclosures and cannot rely on model memory.

Why this test?

Generalist AI systems break down most frequently, and most visibly, when asked to collect high-precision financial data across multiple periods. If the underlying financial data is wrong, any downstream analysis, whether performed by AI or by a human, will be flawed.

High-fidelity, precise collation of financial data is both one of the most important and one of the most difficult AI tasks in finance.

Query design

We created 25 queries representative of common institutional research tasks. Each query required:

- Retrieval from primary disclosures (press releases, SEC filings or call transcripts)

- Alignment and prioritization of conflicting information across documents

- Extraction across multiple reporting periods

- Assembly of structured time series
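The alignment, prioritization, and assembly steps above can be illustrated with a minimal Python sketch. It is not Hudson Labs’ implementation: it assumes a hypothetical data shape in which each extracted value carries a period, a value, and a source type, and it assumes a hypothetical priority order (SEC filing over press release over call transcript), since the test description does not specify how conflicts are resolved. The property it demonstrates is that a period with no disclosed value is left empty (reported as NA) rather than interpolated from its neighbours.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical priority order for resolving conflicts between documents.
# Lower number = more authoritative. This ordering is an illustrative assumption.
SOURCE_PRIORITY = {"sec_filing": 0, "press_release": 1, "call_transcript": 2}


@dataclass(frozen=True)
class Candidate:
    """One value extracted from one document for one reporting period."""
    period: str       # e.g. "Q1'24"
    value: float
    source_type: str  # must be a key of SOURCE_PRIORITY


def assemble_series(
    periods: list[str], candidates: list[Candidate]
) -> dict[str, Optional[float]]:
    """Build a period -> value time series from disclosed candidates only.

    For each requested period, keep the candidate from the highest-priority
    source (ties keep the first candidate seen). Periods with no disclosed
    value map to None, i.e. "NA"; nothing is interpolated or estimated.
    """
    best: dict[str, Candidate] = {}
    for c in candidates:
        current = best.get(c.period)
        if current is None or (
            SOURCE_PRIORITY[c.source_type] < SOURCE_PRIORITY[current.source_type]
        ):
            best[c.period] = c
    return {p: (best[p].value if p in best else None) for p in periods}


# Usage with hypothetical figures: the filing overrides the transcript for
# Q1'24, and Q2'24 stays None because nothing was disclosed for it.
series = assemble_series(
    ["Q1'24", "Q2'24", "Q3'24"],
    [
        Candidate("Q1'24", 100.0, "call_transcript"),
        Candidate("Q1'24", 101.0, "sec_filing"),
        Candidate("Q3'24", 104.0, "press_release"),
    ],
)
# series == {"Q1'24": 101.0, "Q2'24": None, "Q3'24": 104.0}
```

A real pipeline would also need a policy for conflicting values from equally authoritative sources; keeping the first one seen is only a placeholder here.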

Examples included multi-year revenue by segment, historical share repurchase activity, time series of guidance disclosures, and company-specific KPIs reported across multiple quarters.

A full list of queries can be found in Appendix I.

Why not an existing benchmark?

Most existing financial benchmarks suffer from at least one of the following shortcomings: few or no multi-document or multi-period queries, no testing of guidance identification, and an overemphasis on mathematical reasoning.

While mathematical reasoning is an interesting area of large language model research, Hudson Labs intentionally restricts mathematical inference so that every output is fully auditable and can be matched to its source. Calculations are often better performed in a separate, deterministic step.
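The separation between extraction and calculation can be sketched in Python as follows. In this sketch the model’s only job is to extract disclosed figures, and a derived metric is computed afterwards by ordinary code. The function name `yoy_growth`, the revenue figures, and the choice of year-over-year growth as the derived metric are all hypothetical, chosen for illustration rather than taken from Hudson Labs’ system. Because the calculation is plain code, anyone can re-run it against the source figures and get the same answer.

```python
from typing import Optional


def yoy_growth(current: Optional[float], prior: Optional[float]) -> Optional[float]:
    """Year-over-year growth computed deterministically from two disclosed figures.

    Returns None when either input was not disclosed, or when the prior value
    is zero, instead of guessing a value.
    """
    if current is None or prior is None or prior == 0:
        return None
    return (current - prior) / prior


# Hypothetical extracted figures (period -> disclosed value; None = not disclosed).
revenue = {"FY2022": 200.0, "FY2023": 230.0, "FY2024": None}

growth_2023 = yoy_growth(revenue["FY2023"], revenue["FY2022"])  # 0.15
growth_2024 = yoy_growth(revenue["FY2024"], revenue["FY2023"])  # None
```

The missing FY2024 figure propagates as None rather than being filled in, which keeps the output traceable to what was actually disclosed.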

How to recreate this test

All questions and answers are included in Appendix I.

Why Multi-Period & Multi-Document Testing Matters in Financial AI

It’s easy to get satisfactory results from virtually any AI model when the task involves summarizing a short or simple document like a single earnings call transcript. But as the number of documents increases, precision decreases.

Even reasoning systems struggle with recall when large volumes of data must be processed. When more than two earnings call transcripts (or similar disclosures) are involved, results often degrade.

If you’ve asked GPT-5 or NotebookLM to extract specifics from a prospectus or perform a 10-K redline, you have likely encountered this issue.

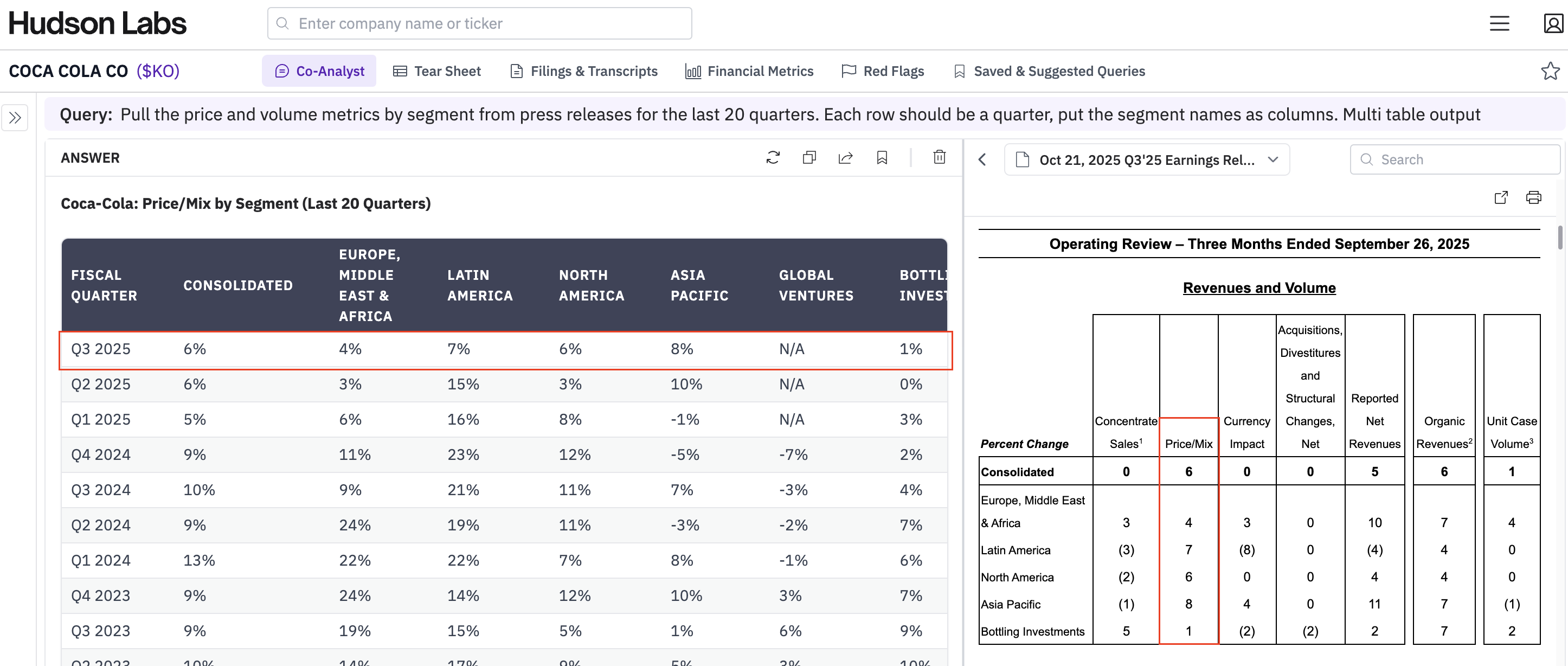

Below is an example in which we asked GPT-5 to retrieve all store-related metrics for American Eagle (e.g., the total number of physical American Eagle stores and the number of stores opened or closed). Investors track store growth and decline when assessing a retailer’s operating performance, but because this metric is not reported on the financial statements, it is less standardized.

GPT-5: American Eagle Store KPIs for the last 2 years

GPT-5 used the correct sources, and the figures initially looked plausible. In fact, some values looked slightly more realistic than the company’s actual reported “stores ended” figures, because GPT-5 had extrapolated them and the results were therefore smoothed.

However, after 6.5 minutes of reasoning, GPT-5 returned incorrect store counts for Q4’24, Q1’24, and Q3’23.

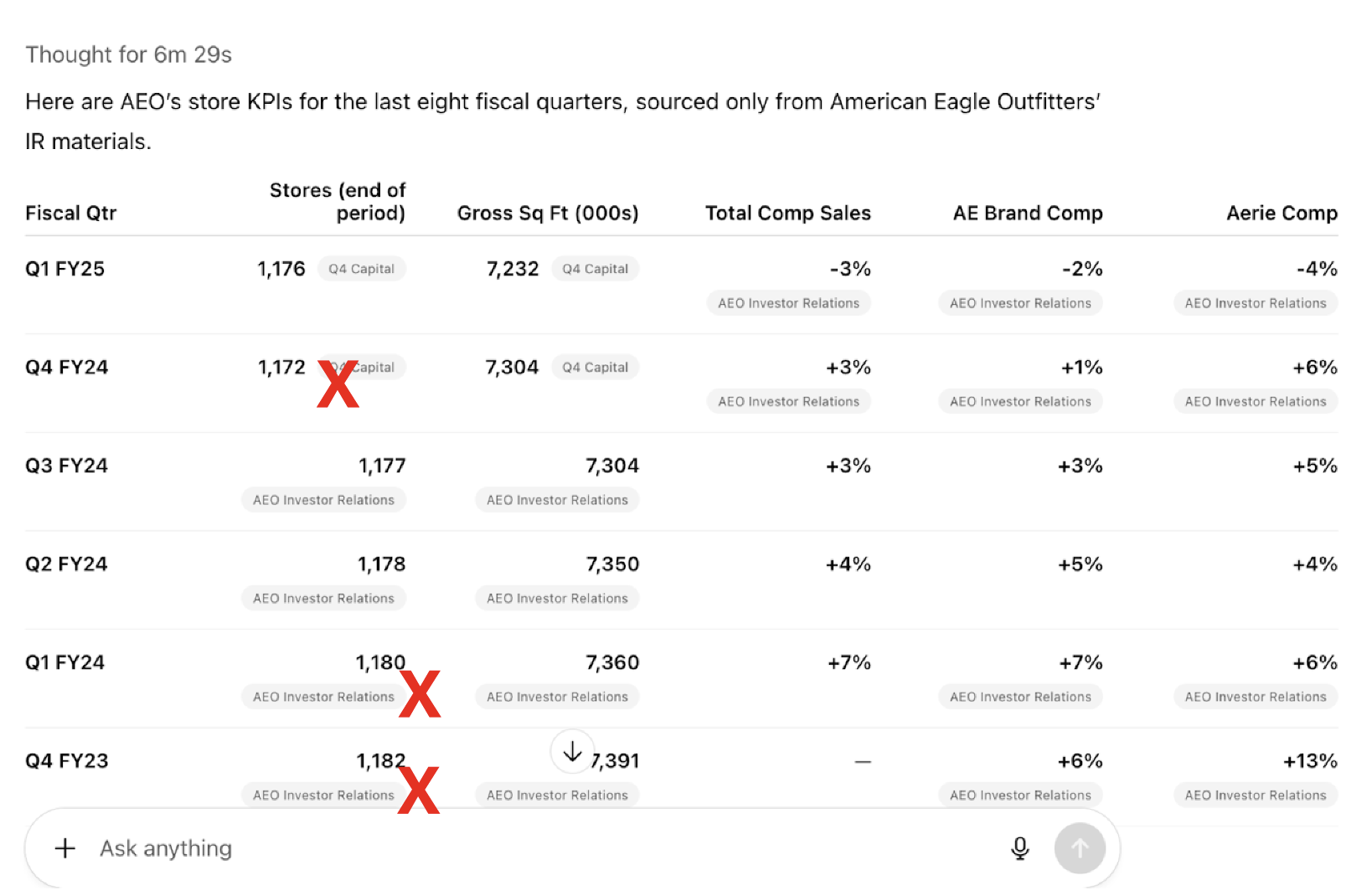

By comparison, the Hudson Labs Co-Analyst’s answer was entirely correct. Equally important, the Co-Analyst surfaced seven additional relevant store-related metrics that GPT-5 omitted.

GPT-5 produced only End of Period Stores and Gross Square Feet, while Hudson Labs extracted the full set of store-related metrics. This difference is driven by Hudson Labs’ proprietary relevance ranking technology, described here: [link]

Co-Analyst: American Eagle Store KPIs for the last 2 years

Comparison to Perplexity Pro & GPT-5

In institutional workflows, reliability is binary: a single incorrect number can invalidate an entire analysis. To assess the reliability of the Co-Analyst and compare it with popular models, we used a strict scoring system that reflects this reality.

Scoring Framework

- 1 point — Fully correct and complete extraction

- 0 points — Any error, omission, hallucination, or inferred value

No partial credit was awarded.
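The all-or-nothing rule above can be expressed as a short scoring function. This is a hypothetical sketch, not the harness used in the test: it assumes each answer and its ground truth are dictionaries mapping periods to values, with None meaning “NA” (not disclosed). Any omission, inferred value, or wrong value makes the whole query score 0.

```python
from typing import Optional

Series = dict[str, Optional[float]]  # period -> value; None means "NA" (not disclosed)


def classify_errors(answer: Series, truth: Series) -> list[tuple[str, str]]:
    """List (period, error_kind) pairs for one query.

    error_kind is one of:
      "omission"                 - answer gives NA (or skips the period) but a value was disclosed
      "hallucinated_or_inferred" - answer gives a value for a period with no disclosed value,
                                   or for a period that was not part of the ground truth
      "wrong_value"              - both have a value but the values differ
    """
    errors: list[tuple[str, str]] = []
    for period, true_value in truth.items():
        got = answer.get(period)
        if true_value is None and got is not None:
            errors.append((period, "hallucinated_or_inferred"))
        elif true_value is not None and got is None:
            errors.append((period, "omission"))
        elif got != true_value:
            errors.append((period, "wrong_value"))
    # Extra periods the answer invented are also counted against it.
    for period in sorted(answer.keys() - truth.keys()):
        if answer[period] is not None:
            errors.append((period, "hallucinated_or_inferred"))
    return errors


def score_query(answer: Series, truth: Series) -> int:
    """Binary score: 1 only if there are no errors of any kind, otherwise 0."""
    return 0 if classify_errors(answer, truth) else 1


# Hypothetical example: P2 was not disclosed, but the answer fills it in with
# a smooth, plausible-looking interpolated value.
truth = {"P1": 100.0, "P2": None, "P3": 104.0}
interpolated = {"P1": 100.0, "P2": 102.0, "P3": 104.0}
print(classify_errors(interpolated, truth))  # [('P2', 'hallucinated_or_inferred')]
print(score_query(interpolated, truth))      # 0
```

Treating an interpolated value as an error even when it looks reasonable is the point of the rule: a correct-looking estimate is still not a disclosed figure.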

The Co-Analyst was required to extract only disclosed values. If data was unavailable, it was required to state that explicitly rather than estimate.

Perplexity Pro and GPT-5 received identical queries, plus an additional instruction in every prompt specifying which high-quality sources to use. The Co-Analyst does not need this instruction. The test was run on September 22, 2025.

*Errors were limited to omissions (NA where information existed elsewhere).

Notably, GPT-5 performed significantly worse without detailed source-selection instructions, producing only one fully correct answer out of 25. This matters because, in everyday use, most people do not specify source documents in their prompts, and software products built as wrappers around OpenAI models often do not invest in robust source selection. In practice, then, GPT-5’s results often look more like the second column than the first.

Both GPT-5 and Perplexity Pro produced multiple hallucinated, inferred, or incorrect figures. Hudson Labs was the only platform in the test with zero hallucinations or inferred values.

Co-Analyst Test Results

Across all 25 queries, the Co-Analyst produced zero hallucinations:

- No fabricated figures

- No inferred values

- No interpolated or trend-based estimates

- No synthetic completions of missing periods

When data was not disclosed, the system stated that explicitly.

In addition to hallucination testing, we measured completeness. Out of 25 multi-period queries:

- The Co-Analyst delivered fully correct and complete results in 21 cases

- In the remaining 4 cases, errors were limited to omitted figures

In all 25 cases, no fabricated or estimated values were introduced. In every case of omission, only one of many periods was missed.

This distinction matters: omitted figures are obvious and easily rectified through manual follow-up, while fabricated values are almost impossible to spot without checking every figure against the source. Fabricated figures often look more realistic than reported figures because AI models excel at extrapolation.

Conclusion

This test demonstrates that while generalist AI models perform satisfactorily in specific low-complexity situations (e.g., summarizing a single earnings call), a more robust architectural solution is crucial for reliable performance on multi-period and multi-document tasks. A simplistic wrapper-style approach cannot meet the requirements of institutional finance.

This evaluation shows that the Hudson Labs Co-Analyst’s proprietary AI architecture delivers results that meet institutional standards:

- Zero hallucinations across 25 complex, multi-period financial queries

It reliably:

- Retrieves primary disclosures

- Extracts structured time-series data

- Avoids interpolation or inference

- Grounds all outputs in authoritative sources

Hudson Labs is proud to have been a leader in finance-first large language model research since 2019. Our proprietary architecture continues to outperform models from large AI labs on high-precision financial tasks.